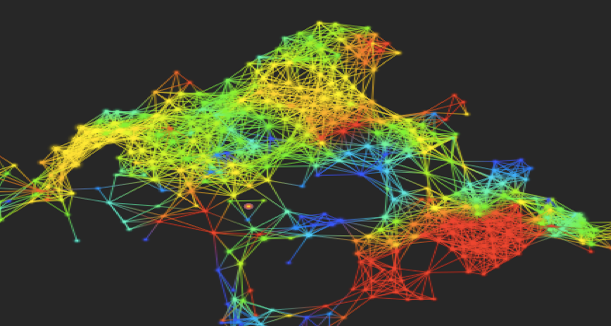

In an era of ever-increasing data complexity, traditional analysis techniques sometimes struggle to capture the intrinsic shape and connectivity of datasets. Topological Data Analysis (TDA) offers a novel approach by applying concepts from algebraic topology to reveal the underlying structure of high-dimensional data. By focusing on the “shape” of data—its holes, loops and connected components—TDA uncovers robust features that are often invisible to standard statistical methods. Professionals seeking to incorporate TDA into their toolkit can begin with a data science course, where foundational topology and persistent homology algorithms are demonstrated through practical exercises.

Understanding Topological Data Analysis

TDA rests on the principle that data points, when viewed as samples from an underlying space, form a topological space whose features can be quantified. Two central constructs in TDA are:

- Simplicial Complexes: Combinatorial structures built from vertices, edges, triangles and higher-dimensional simplices to approximate the shape of the point cloud.

- Persistent Homology: A method that tracks the creation and destruction of topological features—connected components, loops and voids—across multiple spatial scales.

By varying a scale parameter (often a distance threshold), persistent homology produces a multiscale summary of data shape in the form of persistence diagrams or barcodes. These summaries capture the prominence of each feature and are stable under noise, making TDA robust to measurement errors.

Key Concepts and Algorithms

Implementing TDA involves several algorithmic steps:

- Distance Matrix Construction: Computing pairwise distances between data points to inform complex construction.

- Complex Generation: Building Vietoris–Rips or Čech complexes at varying thresholds to approximate the topology.

- Homology Computation: Applying matrix reduction algorithms to compute Betti numbers, which count features across dimensions.

- Persistence Diagram Analysis: Visualising feature lifetimes and extracting summary statistics—such as the longest-surviving loops—that correlate with meaningful data patterns.

State-of-the-art libraries optimise these computations through sparse matrix techniques and parallel processing, allowing TDA to scale to thousands of points and higher dimensions.

Applications in Complex Data Domains

TDA has found applications across diverse fields:

- Biology and Medicine: Analysing the shape of cellular gene-expression data to identify subpopulations and developmental trajectories.

- Sensor Networks: Mapping the connectivity and coverage holes of distributed sensors for anomaly detection and network resilience.

- Finance: Uncovering market regime changes by examining the topology of asset-return spaces during periods of stress.

- Neuroscience: Characterising brain connectivity by applying persistent homology to functional MRI data, revealing differences associated with cognitive states.

In each case, topological features complement traditional metrics—such as mean and variance—by highlighting global structures that drive behaviour in complex systems.

Tools and Libraries

Several open-source packages support TDA workflows, including:

- GUDHI: A flexible C++ library with Python bindings for complex construction and persistent homology.

- Ripser.py: A highly efficient implementation of Vietoris–Rips persistence, optimised for speed on large datasets.

- scikit-tda: A Python ecosystem that integrates TDA with standard data-science tools, offering pipelines for persistence computation, diagram plotting and feature extraction.

Practitioners often consolidate their learning by enrolling in a data science course in Bangalore, which provides guided tutorials on installing these libraries, integrating TDA into machine-learning pipelines and interpreting persistence-based features in real-world case studies.

Implementation Considerations

Successfully adopting TDA requires attention to several practical factors:

- Scale Parameter Selection: Choosing a range of thresholds that captures relevant features without being overwhelmed by noise.

- Dimensionality Reduction: Preprocessing high-dimensional data with PCA or UMAP to reduce computational load while preserving global shape.

- Feature Engineering: Converting persistence diagrams into numerical descriptors—such as persistence landscapes or persistence images—for downstream learning tasks.

- Integration with ML Models: Combining topological features with classical features in classifiers or regressors, assessing incremental performance gains.

By carefully tuning these steps, data teams ensure that TDA enhances model robustness rather than adding complexity without benefit.

Training and Skill Development

Mastering TDA blends theoretical insights with coding proficiency. Key learning objectives include:

- Topology Fundamentals: Grasping homology theory, Betti numbers and nerve lemma.

- Algorithmic Implementation: Writing code for simplicial complexes and boundary operators.

- Software Integration: Employing TDA libraries within data-science frameworks like pandas and scikit-learn.

- Interpretation and Communication: Translating topological summaries into actionable insights for stakeholders.

Structured programmes—such as a comprehensive data science course—provide this layered curriculum, with capstone projects that challenge students to apply TDA to novel datasets under mentorship.

Future Outlook

As data complexity continues to grow, TDA will underpin predictive analytics pipelines, anomaly detection frameworks, and even generative models that respect global data topology. Advances in hardware acceleration and distributed computing will enable real-time homology computations, allowing organisations to track topological shifts as events unfold.

Implementation Roadmap

To integrate TDA effectively into analytics workflows, consider the following phased approach:

- Pilot Phase – Select a representative dataset with known structural features. Implement a basic persistence pipeline to validate insights against domain expertise.

- Toolchain Enablement – Introduce infrastructure—compute clusters or GPU-enabled servers—to handle complex simplicial complexes. Automate pipeline orchestration using workflow managers (e.g., Airflow, Prefect).

- Feature Integration – Develop modules to extract numerical summaries from persistence diagrams (landscapes, images) and feed them into existing machine-learning models. Conduct A/B tests to quantify performance improvements.

- Operationalisation – Containerise TDA components and deploy via Kubernetes or serverless platforms. Monitor computation latency, memory usage, and feature stability in production.

- Scaling and Governance – Expand to additional data domains, institute metadata tracking for topological features, and enforce governance policies to maintain data lineage and reproducibility.

Measuring Impact and Adoption

Organisations should track key performance indicators to assess TDA effectiveness:

- Analytics Precision Lift – Improvement in classification/regression metrics after incorporating topological features.

- Pipeline Throughput – Time to compute persistence summaries at scale, aiming for sub-second responses in real-time applications.

- User Adoption – Number of data-science teams leveraging TDA modules in their workflows.

- Decision Velocity – Reduction in time from data ingestion to actionable insight, particularly in exploratory analysis tasks.

Change Management and Cultural Integration

Embedding TDA into an organisation requires not only technology but also cultural alignment. Establish a centre of excellence to champion topological methods, host internal workshops highlighting success stories, and mentor analytics teams through hackathons and collaborative projects. Equipping analysts with intuitive visualization tools for barcodes and persistence landscapes demystifies the mathematics and fosters broader adoption.

Conclusion

Topological Data Analysis unlocks a new dimension of insight by capturing the shape and connectivity of complex datasets. From uncovering hidden cellular subtypes to ensuring sensor-network resilience, TDA complements traditional tools with robust, multiscale feature extraction. Practitioners ready to explore this frontier can build a strong foundation through a data science course in Bangalore that covers both theory and hands-on applications. With topological methods in their toolkit—reinforced by structured training—data scientists can tackle the most challenging problems in high-dimensional, complex data structures and deliver transformative insights.

For more details visit us:

Name: ExcelR – Data Science, Generative AI, Artificial Intelligence Course in Bangalore

Address: Unit No. T-2 4th Floor, Raja Ikon Sy, No.89/1 Munnekolala, Village, Marathahalli – Sarjapur Outer Ring Rd, above Yes Bank, Marathahalli, Bengaluru, Karnataka 560037

Phone: 087929 28623

Email: enquiry@excelr.com